What are Impact Evaluations?

Impact Evaluations

Impact Evaluations are scientifically robust evaluations that use complex sampling and statistical tools. They are the most robust method for determining the impact of a project or program.

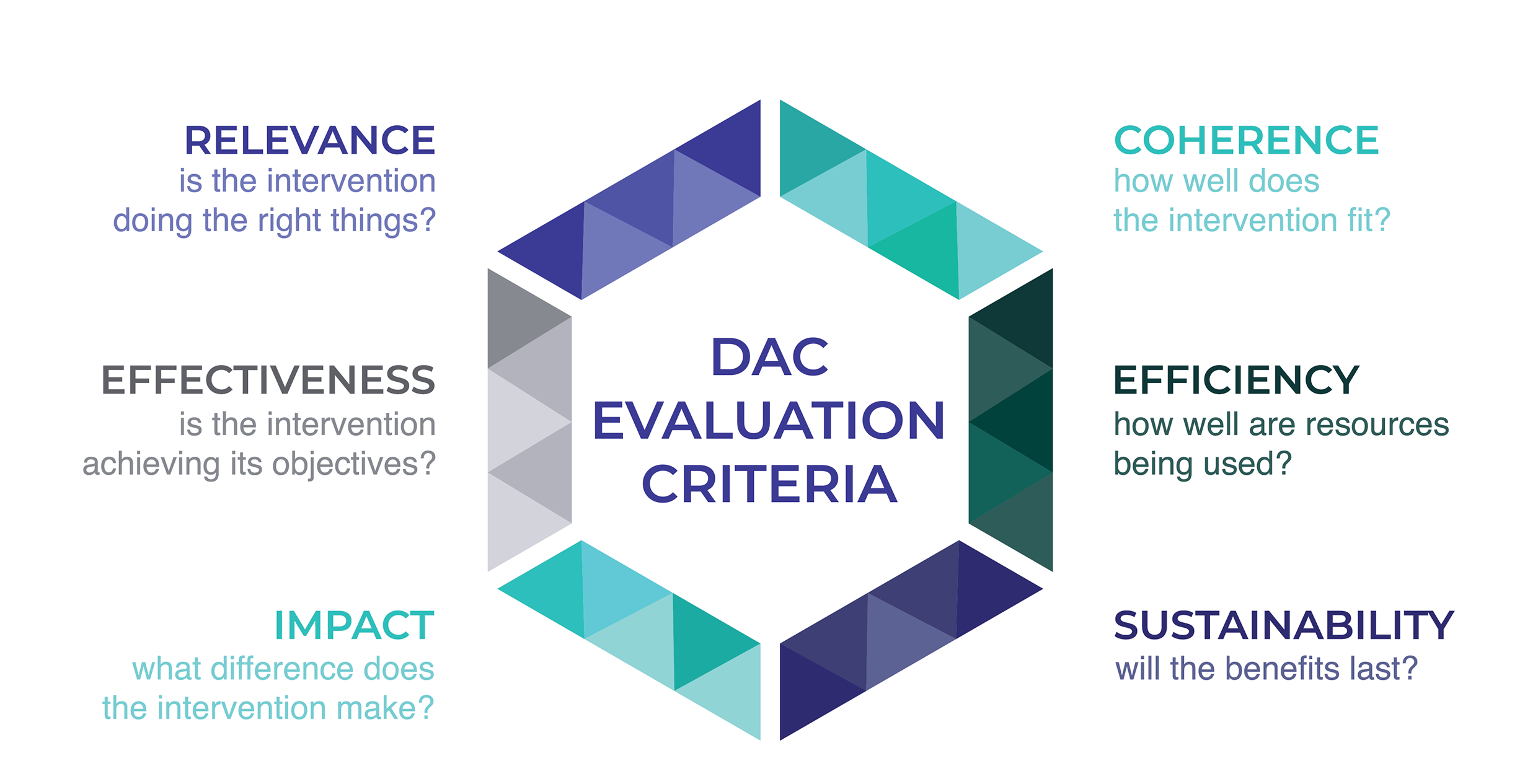

Types of Evaluations

When evaluating a project or program there are a number of options to choose from:

⇒ Process Evaluation

⇒ Participatory Evaluation

⇒ Impact Evaluation

⇒ Performance Evaluation

⇒ Implementing Partner Evaluation

The above design approaches can be used Formatively (at the design and development stage of the program, for example with a baseline assessment) and/or Summatively (at the conclusion of the program, where an end-line evaluation is conducted).

Because each of the above design approaches achieves a different type of verification, they can also be conducted together. For example, one monitoring, evaluation and learning (MEAL) plan can contain a Process Evaluation, a Performance Evaluation and an Impact Evaluation.

Impact Evaluation Benefits

Impact Evaluations provide two major benefits.

The first is that they provide robust statistical confirmation (or not) that the program has achieved the intended impact.

This provides confidence that the work that you are doing is having real impact for the communities you are serving.

The second major benefit is that this also allows implementers and donors to scale successful programs with confidence.

Process Evaluations

Process Evaluations are used to determine if the program is being implemented as agreed. For example, in a health project, a Process Evaluation checks that the health commodities are being distributed in the manner, to the groups, according to the timeline and in the condition agreed.

They verify that activities happened, not the impact of those activities.

Participatory Evaluation

Participatory Evaluations determine whether the beneficiaries have been involved throughout the program’s design and implementation. Participatory Evaluations are usually used on capacity-building projects to determine if the capabilities of the beneficiaries have been increased.

Performance Evaluations

Performance Evaluations aim to build a strong empirical case for whether the program has achieved its goals. Performance Evaluations are less robust and less defensible than Impact Evaluations and will rarely be able to make claims about impact or outcomes.

Performance Evaluations are more common than Impact Evaluations because they are much easier to conduct.

Impact Evaluation Key Concepts

Impact

We define impact as the ultimate goal of the program.

Attribution

Impact Evaluations aim to determine attribution.

Attribution is the extent to which the observable change is a result of the project.

Another way to express this is: how much better off is a beneficiary who has participated in the program?

The key is to determine the program’s contribution to the change. For example, if a change in income is USD100, then how much of that increased income has been brought about by the project?

Counterfactual

One of the main design differences between Impact Evaluations and Performance Evaluations is that Impact Evaluations use Counterfactuals.

The purpose of a Counterfactual is to determine what would have happened if the program had not been implemented. This is the core of the Impact Evaluation: it compares the outcomes observed under the program against what would have happened had it not been implemented.
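
As a rough illustration, this comparison can be written as a simple difference. The symbols and the USD60 counterfactual figure are assumptions made for this example only; the USD100 figure is the income change used in the Attribution example above.

```latex
\[
\text{Impact} = Y_{\text{with program}} - Y_{\text{without program (Counterfactual)}}
\]
\[
\underbrace{100}_{\text{observed change, USD}}
- \underbrace{60}_{\text{counterfactual change, USD (hypothetical)}}
= 40 \ \text{USD attributable to the program}
\]
```

In this hypothetical case, only USD40 of the USD100 increase would be attributed to the project, because USD60 of it would have occurred anyway.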

There are two approaches to determining the Counterfactual – either Quasi Experimental Design or an Experimental Design, also known as a Randomised Control Trial (RCT). All Impact Evaluations use one of these.

Sampling

Sampling for Impact Evaluations is a complex statistical process. Depending on the type of Impact Evaluation, it can use Precision-Based Sample Calculations or Comparative Power-Based Sample Calculations.
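
To give a feel for the two kinds of calculation, here is a minimal Python sketch using standard textbook formulas under a normal approximation. The function names, default values and the choice of formulas are assumptions for illustration, not a description of ESDO's method. A real calculation would typically also adjust for clustering, non-response and attrition.

```python
import math
from statistics import NormalDist


def precision_based_sample_size(margin_of_error, confidence_level=0.95,
                                expected_proportion=0.5):
    """Sample size needed to estimate a proportion within +/- margin_of_error."""
    # Two-sided z-value for the chosen confidence level (about 1.96 at 95%).
    z = NormalDist().inv_cdf(1 - (1 - confidence_level) / 2)
    variance = expected_proportion * (1 - expected_proportion)
    return math.ceil(z ** 2 * variance / margin_of_error ** 2)


def power_based_sample_size(effect_size, confidence_level=0.95, power=0.80):
    """Sample size PER GROUP needed to detect a difference between two means.

    effect_size is the standardised difference (difference in means divided
    by the standard deviation), an assumption of this sketch.
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence_level) / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


if __name__ == "__main__":
    print(precision_based_sample_size(margin_of_error=0.05))
    print(power_based_sample_size(effect_size=0.5))
```

With these inputs the sketch prints 385 for the precision-based case and 63 per group for the power-based case. Smaller effect sizes push the required sample up quickly, which is why the expected Effect Size matters so much at the design stage.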

Types of Impact Evaluation

Experimental Design / Randomised Control Trial (RCT)

RCTs compare two groups (a treatment group and a control group) that are randomly selected from the target population. This can be done using Simple Random Assignment/Selection, Cluster Randomisation, Phased-in Selection or Random Sampling.
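
The first two of these techniques can be sketched in a few lines of Python. This is an illustrative sketch only: the function names, the 50/50 split and the household and village identifiers are hypothetical, and a real assignment would be run against the program's actual list of eligible participants.

```python
import random


def simple_random_assignment(eligible_ids, seed=None):
    """Randomly split a list of eligible units into two equal-sized arms."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(eligible_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "treatment": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }


def cluster_random_assignment(cluster_of, seed=None):
    """Assign whole clusters (e.g. villages) to an arm, then label each unit.

    cluster_of maps a unit id to the id of the cluster it belongs to.
    """
    clusters = sorted(set(cluster_of.values()))
    arms = simple_random_assignment(clusters, seed)
    treated_clusters = set(arms["treatment"])
    return {
        unit: "treatment" if cluster in treated_clusters else "control"
        for unit, cluster in cluster_of.items()
    }


if __name__ == "__main__":
    # Hypothetical household ids and village names.
    print(simple_random_assignment(range(1, 11), seed=42))
    villages = {"hh1": "A", "hh2": "A", "hh3": "B", "hh4": "B"}
    print(cluster_random_assignment(villages, seed=42))
```

Assigning whole clusters rather than individuals is one common way to keep treatment and control households physically apart, at the cost of needing a larger sample.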

These two groups need to be as similar as possible, which makes managing the Spillover Effect (where the program's benefits reach the control group) very difficult.

For this reason, RCTs need to be built into the design of the program from the very beginning. They cannot be implemented retrospectively.

RCTs require a list of the eligible population, from which large samples are drawn.

ESDO will help partners design RCTs into their programs and advise what is possible in terms of Effect Size and Confidence Level. This will inform the sample sizes and data collection effort required.

RCTs are the gold standard for evidence of impact. If implemented correctly, at the end of the program ESDO will be able to determine the attribution and impact of the program to an agreed confidence level.

This can then be used as evidence to either scale or improve program design.

Quasi Experimental Design

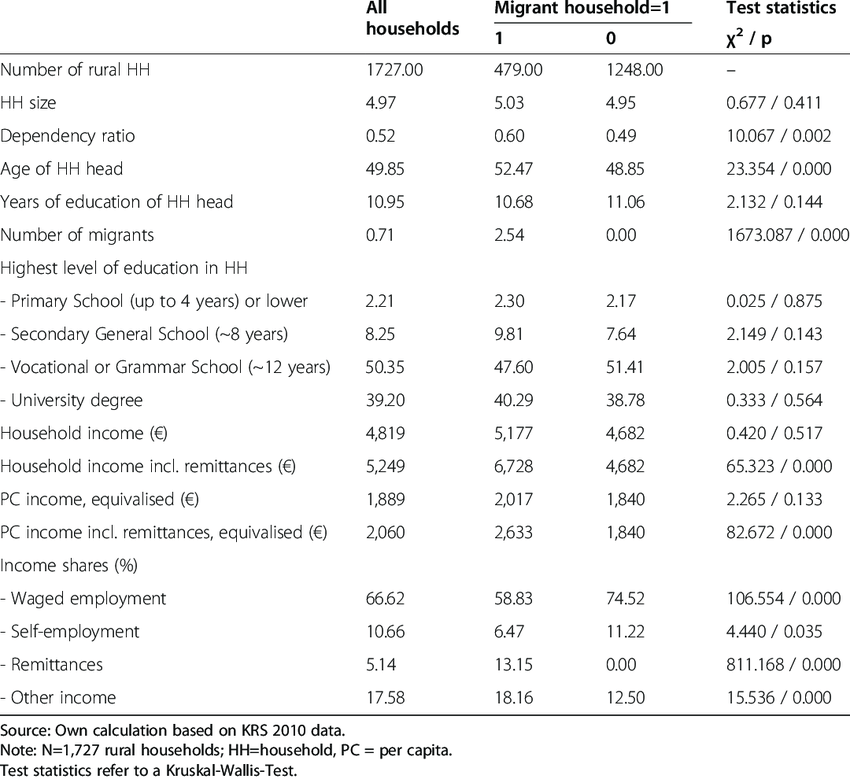

Quasi Experimental Design (QED) uses a non-random technique for assigning treatment and control groups.

QED PSM analysis of beneficiary migrant households in Europe

⇒ Sufficient comparable data is available from the non-treatment group from baseline and end-line quantitative studies.

⇒ The comparison groups are very similar.

⇒ The eligible population is large enough.

QED can be conducted using Propensity Score Matching (PSM), Difference in Differences (DiD) or Regression Discontinuity. ESDO will advise which method is the most appropriate in your situation.
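
Of the three methods, Difference in Differences is the simplest to illustrate. The sketch below is a minimal Python version that compares group means only. The function name and all figures are hypothetical, and a real analysis would normally use a regression with standard errors rather than raw means.

```python
from statistics import mean


def difference_in_differences(treatment_baseline, treatment_endline,
                              comparison_baseline, comparison_endline):
    """Estimate impact as the treatment group's change minus the comparison group's change."""
    treatment_change = mean(treatment_endline) - mean(treatment_baseline)
    # The comparison group's change stands in for the Counterfactual trend.
    comparison_change = mean(comparison_endline) - mean(comparison_baseline)
    return treatment_change - comparison_change


if __name__ == "__main__":
    # Hypothetical monthly household incomes in USD.
    estimate = difference_in_differences(
        treatment_baseline=[100, 110, 120],
        treatment_endline=[150, 160, 170],
        comparison_baseline=[100, 105, 125],
        comparison_endline=[120, 130, 140],
    )
    print(estimate)
```

Here the treatment group's average income rises by USD50 and the comparison group's by USD20, so the estimated impact is USD30. The estimate is only credible if both groups would have followed the same trend without the program, which is why baseline and end-line data from a similar comparison group are needed.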

We care deeply that all our work is

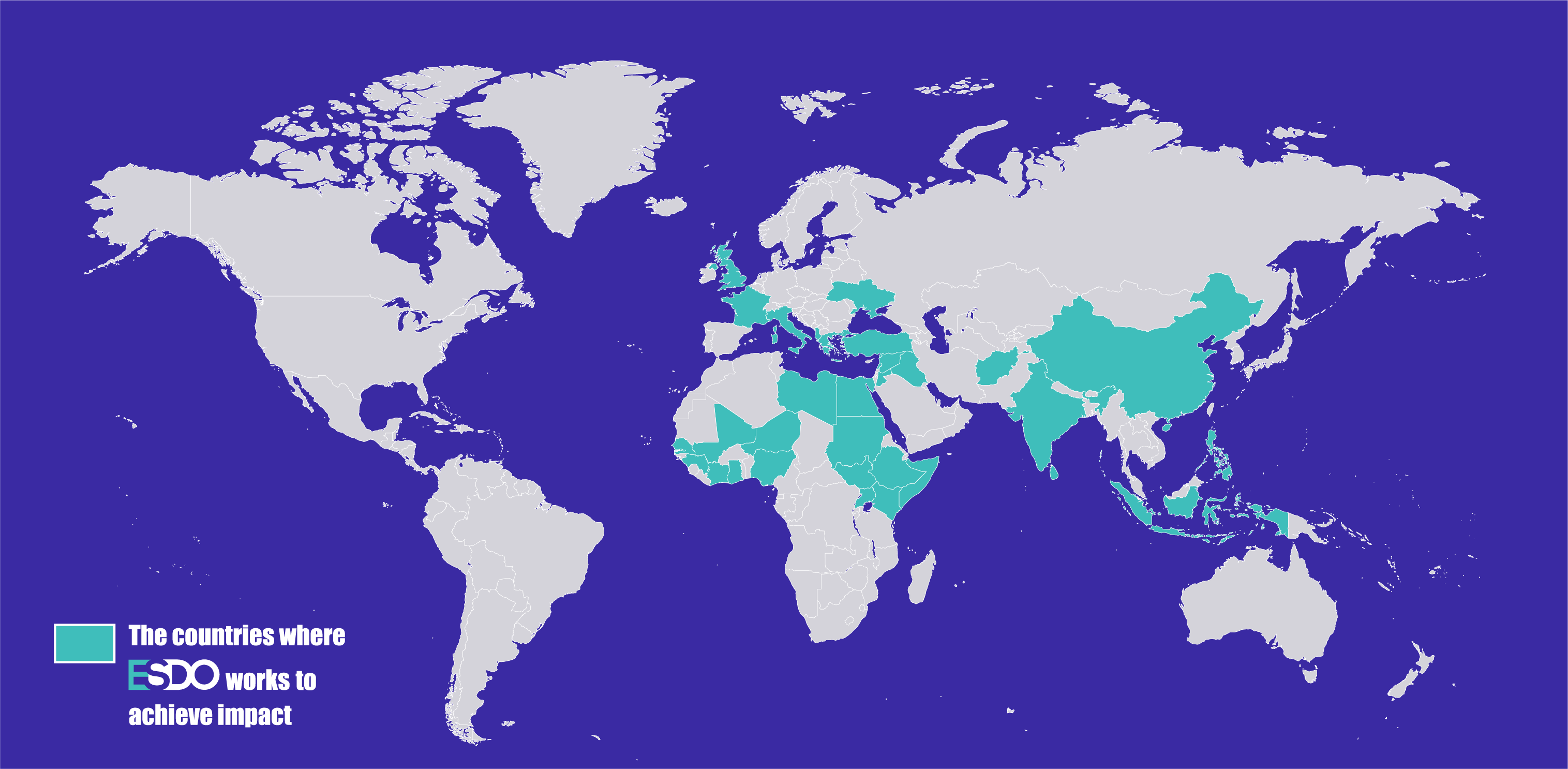

Where We Work